To gain more insight into the pain points of the dining experience, we interviewed staff in five types of dining settings: Fast Food, Cafe, Low-End Buffet, High-End Buffet, and Fine Dining. Our goal was to understand the needs, pain points, and workplace interactions of service staff in each setting, and from these to identify suitable uses for subtle AR gestures.

During the user interview stage, we discovered two major themes. Firstly, the cashiers and waiters at every restaurant we visited need immediate access to information, which Google Glass could provide, but the kind of information they need depends on context. For example, waiters at a campus cafe sometimes forget where customers are seated and what they ordered when it gets crowded, while waiters at a high-end seafood restaurant need to keep up with a long, frequently changing menu and the origins of different fish. For fast prototyping and testing, we chose fast food cashiers as our scenario, although other scenarios may involve more complicated and urgent information needs and higher customer expectations, and would therefore benefit more from subtle gestures in AR applications.

Secondly, the expectations of customers and waiters can differ. For example, while waiters at high-end restaurants could benefit from having the menu and customer information displayed on Google Glass, customers at high-end restaurants may expect personable, professional service and could be put off by a waiter wearing Google Glass and making confusing gestures. This is also why we decided to shoot the videos from both the customer's and the cashier's perspectives.

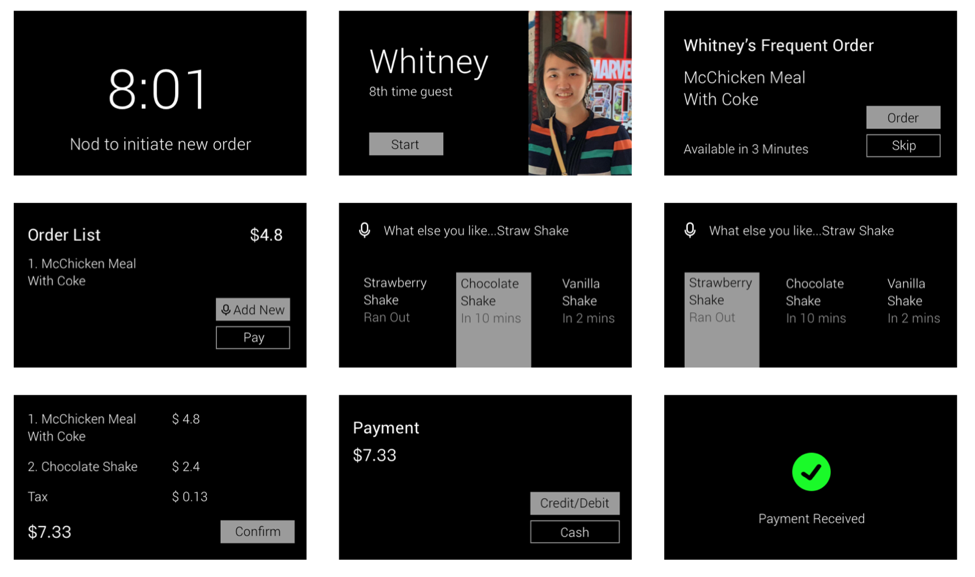

To determine which features a cashier needs to complete a smooth transaction without looking down or diverting attention from the customer, we listed the key steps a cashier goes through to place an order: greet the customer; recommend food if needed; take the order; check stock; follow up with the customer; and complete payment.

Based on these steps, we discussed what information the customer and the cashier should exchange, what it should look like on the Google Glass interface, and which gestures suit which actions. Specifically, we chose self-sync gestures (head nodding and wrist twisting) for initiation, a double tap on the ring for confirmation, a swipe on the ring for switching options, and a long tap on the ring for cancellation.
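As a rough illustration of how the ordering steps and the gesture mapping could fit together, the cashier-side interaction can be sketched as a small state machine. This is a minimal Python sketch under stated assumptions, not our prototype's implementation: the `Gesture`, `Action`, and `OrderSession` names are hypothetical, every step is assumed to offer the same fixed number of on-screen options, and gesture recognition itself (detecting a nod or reading the ring) is assumed to happen elsewhere.

```python
from enum import Enum, auto


class Gesture(Enum):
    # Hypothetical names for the gestures described in the text.
    HEAD_NOD = auto()         # self-sync gesture
    WRIST_TWIST = auto()      # self-sync gesture
    RING_DOUBLE_TAP = auto()
    RING_SWIPE = auto()
    RING_LONG_TAP = auto()


class Action(Enum):
    INITIATE = auto()
    CONFIRM = auto()
    SWITCH = auto()
    CANCEL = auto()


# Gesture-to-action mapping as described: self-sync gestures initiate,
# double tap confirms, swipe switches options, long tap cancels.
GESTURE_TO_ACTION = {
    Gesture.HEAD_NOD: Action.INITIATE,
    Gesture.WRIST_TWIST: Action.INITIATE,
    Gesture.RING_DOUBLE_TAP: Action.CONFIRM,
    Gesture.RING_SWIPE: Action.SWITCH,
    Gesture.RING_LONG_TAP: Action.CANCEL,
}

# Key steps a cashier goes through to place an order, in order.
STEPS = ("greet", "recommend", "order", "check_stock", "follow_up", "payment")


class OrderSession:
    """Hypothetical state machine for one order, driven only by gestures."""

    def __init__(self, num_options: int = 3) -> None:
        # Assumption: every step shows the same number of options.
        self.num_options = num_options
        self.reset()

    def reset(self) -> None:
        self.active = False
        self.step = 0
        self.option = 0
        self.choices = []  # (step name, chosen option index) pairs
        self.completed = False

    def handle(self, gesture: Gesture) -> str:
        action = GESTURE_TO_ACTION[gesture]
        if action is Action.INITIATE:
            # Start a new order only when idle; repeated nods mid-order are ignored.
            if not self.active:
                self.reset()
                self.active = True
        elif not self.active:
            pass  # ring gestures do nothing until a self-sync gesture starts an order
        elif action is Action.SWITCH:
            self.option = (self.option + 1) % self.num_options
        elif action is Action.CONFIRM:
            self.choices.append((STEPS[self.step], self.option))
            self.step += 1
            self.option = 0
            if self.step == len(STEPS):
                self.active = False
                self.completed = True
        elif action is Action.CANCEL:
            self.reset()
        return self.status()

    def status(self) -> str:
        if self.completed:
            return "done"
        if not self.active:
            return "idle"
        return f"{STEPS[self.step]}:{self.option}"
```

For example, a head nod moves an idle session to `greet:0`, a ring swipe moves it to `greet:1`, and a double tap advances it to `recommend:0`. Ignoring ring input before initiation, and ignoring further nods once an order is in progress, is one way to keep natural head movements during conversation from accidentally starting or restarting an order.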

The video production stage also served as a pilot user test of this prototype: actors who were intentionally kept out of the design stage experienced the prototype from the cashier's and the customer's perspectives. The student playing the cashier found the gestures used in this scenario relatively intuitive and easy to learn. Furthermore, because cashiers usually stand behind a counter, they can perform the gestures that control Google Glass without being noticed.

However, the student playing the customer found her ordering experience less natural. At fast food restaurants she expected the cashier to pause and look down to enter her order, but this time the cashier kept eye contact with her the whole time, which felt awkward and left her wondering whether her order had been placed successfully.

Furthermore, when comparing the video with our earlier observations of fast food cashiers, we noticed that using subtle gestures with Google Glass may make it harder for cashiers to chat with customers, because the interaction is driven by the AR interface and casual conversation may interfere with Google Glass's speech recognition.

For this stage, we first showed our concept video from stage one to McDonald's staff and interviewed them to get their feedback.

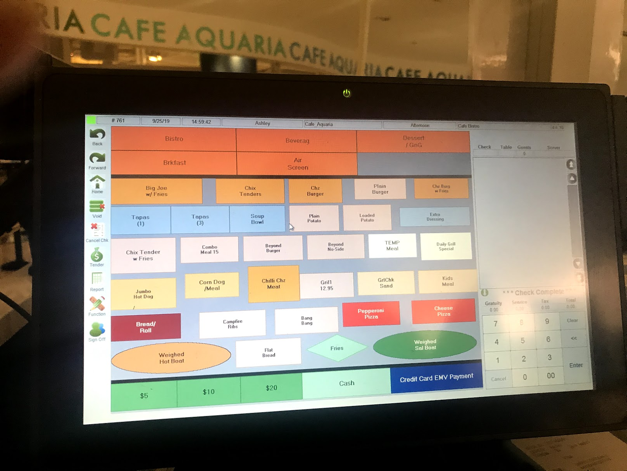

Afterwards, we redesigned our prototype and conducted user testing with 8 participants, comparing a traditional cash register with a cashier using the head-worn display and self-sync gestures.

We recorded user behavior and analyzed survey data to determine which scenario offered the better customer experience and which was more efficient for ordering.

Click here to read the report

More details about the process can be found in the video.